I have two tables: logger_data and MPCable. Logger_data table has hourly gage data records consisting of columns for site_serial (unique for each gage), date-time, water pressure (ch1_data_P), barometric pressure (ch2_data_P), temperature, and other not so very important data. MPCable table has information of cable length (for each site_serial) required for calculating water levels at each gage. The hourly data in logger table is not perfect, has several values that are outside acceptable data range for each gage. I want a final table that would have daily average water level records for each gage. When averaging water levels for each day, I want the query to exclude out-of-range values. Each gage has different acceptable range. I designed an insert query using a combination of IIf and SWITCH function. The logger data table gets updated with new data in every 2-3 days and I want to run this query to add the new average data into "Combined" table with desired output. Here's the query I have designed in SQL:

Initially I used nested IIf's but it gave complexity error after 8 statements. So I am now using SWITCH instead (Still using IIf to specify acceptable data range values for each gage). Couple of questions now:

1. The designed query structure is very basic and has room for improvements. How can I make it more simplified?

2. The designed query is working great for the 9 gage stations. But if I were to add in more statements for more gages in the future, will it create any complexity problem (similar to using too many nested IIf statements)?

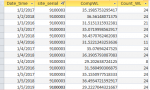

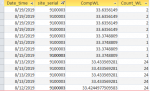

3. The designed query is giving desired results but duplicating records of daily average values for every gage (sometimes 2, sometimes 4-5 same rows). Not that it's a problem as such, but it will be great if I can avoid the duplicate values if using the above designed query. Also why in the first place running that query gives duplicates?

Code:

INSERT INTO Combined ( [Date], site_serial, CountyID, CompWL, Count_WL )

SELECT Format([Date_time],"mm/dd/yyyy") AS [Date], logger_data.site_serial, MPCable.CountyID, Switch([logger_data]![site_serial]=9100001,(Avg([MPC]-[MPH]-([ch1_data_P]-2.307*(IIf((([ch2_data_P]>=13.93) And ([ch2_data_P]<=15.36)),[ch2_data_P],Null))))),[logger_data]![site_serial]=9100002,(Avg([MPC]-[MPH]-([ch1_data_P]-2.307*(IIf((([ch2_data_P]>=13.79) And ([ch2_data_P]<=15.15)),[ch2_data_P],Null))))),[logger_data]![site_serial]=9100003,(Avg([MPC]-[MPH]-([ch1_data_P]-2.307*(IIf((([ch2_data_P]>=13.71) And ([ch2_data_P]<=15.06)),[ch2_data_P],Null))))),[logger_data]![site_serial]=9100004,(Avg([MPC]-[MPH]-([ch1_data_P]-2.307*(IIf((([ch2_data_P]>=13.91) And ([ch2_data_P]<=15.26)),[ch2_data_P],Null))))),[logger_data]![site_serial]=9100005,(Avg([MPC]-[MPH]-([ch1_data_P]-2.307*(IIf((([ch2_data_P]>=13.81) And ([ch2_data_P]<=15.17)),[ch2_data_P],Null))))),[logger_data]![site_serial]=9100006,(Avg([MPC]-[MPH]-([ch1_data_P]-2.307*(IIf((([ch2_data_P]>=13.7) And ([ch2_data_P]<=15.3)),[ch2_data_P],Null))))),[logger_data]![site_serial]=9100007,(Avg([MPC]-[MPH]-([ch1_data_P]-2.307*(IIf((([ch2_data_P]>=13.5) And ([ch2_data_P]<=14.84)),[ch2_data_P],Null))))),[logger_data]![site_serial]=9100008,(Avg([MPC]-[MPH]-([ch1_data_P]-2.307*(IIf((([ch2_data_P]>=13.94) And ([ch2_data_P]<=15.33)),[ch2_data_P],Null))))),[logger_data]![site_serial]=9100009,(Avg([MPC]-[MPH]-([ch1_data_P]-2.307*(IIf((([ch2_data_P]>=13.7) And ([ch2_data_P]<=15.3)),[ch2_data_P],Null)))))) AS CompWL, Count(logger_data.ch1_data_P) AS Count_WL

FROM logger_data INNER JOIN MPCable ON logger_data.site_serial = MPCable.site_serial

GROUP BY Format([Date_time],"mm/dd/yyyy"), logger_data.site_serial, MPCable.CountyID;Initially I used nested IIf's but it gave complexity error after 8 statements. So I am now using SWITCH instead (Still using IIf to specify acceptable data range values for each gage). Couple of questions now:

1. The designed query structure is very basic and has room for improvements. How can I make it more simplified?

2. The designed query is working great for the 9 gage stations. But if I were to add in more statements for more gages in the future, will it create any complexity problem (similar to using too many nested IIf statements)?

3. The designed query is giving desired results but duplicating records of daily average values for every gage (sometimes 2, sometimes 4-5 same rows). Not that it's a problem as such, but it will be great if I can avoid the duplicate values if using the above designed query. Also why in the first place running that query gives duplicates?

Last edited: