ChatGPT: The Future of AI is Here!

- Thread starter Jon

Isaac

Lifelong Learner

- Local time

- Today, 02:11

- Joined

- Mar 14, 2017

- Messages

- 11,499

That is pretty cool ...

Though I enjoy playing devil's advocate to raise questions about things that I sense the public is 'assuming', that does not mean I don't think it is pretty darn awesome.

One thing your posts have served to teach/emphasize for me is how much more the ability to give it precise instructions can be leveraged than first meets the eye.

This thing is pretty cool ...

I'm sure as time goes on, people will figure out more and more ways to subject it to tests of all kinds.

I am trying to imagine a scenario where a knowledgeable person in a field and a more entry-level person in the same field each try to solve a problem. One uses ChatGPT and the other uses more manual research methods. They are timed, and the business scrutinizes the results, process, methodology, etc. It then figures out how much they can use ChatGPT and how much they can't, or, more accurately, what cost savings etc. it generates.

I can't imagine all the nuts and bolts of how that would work, but that sort of thing is bound to become needed to help businesses determine the proper approach to reliance on it, risks, rewards, savings, etc.

- Local time

- Today, 10:11

- Joined

- Sep 28, 1999

- Messages

- 8,064

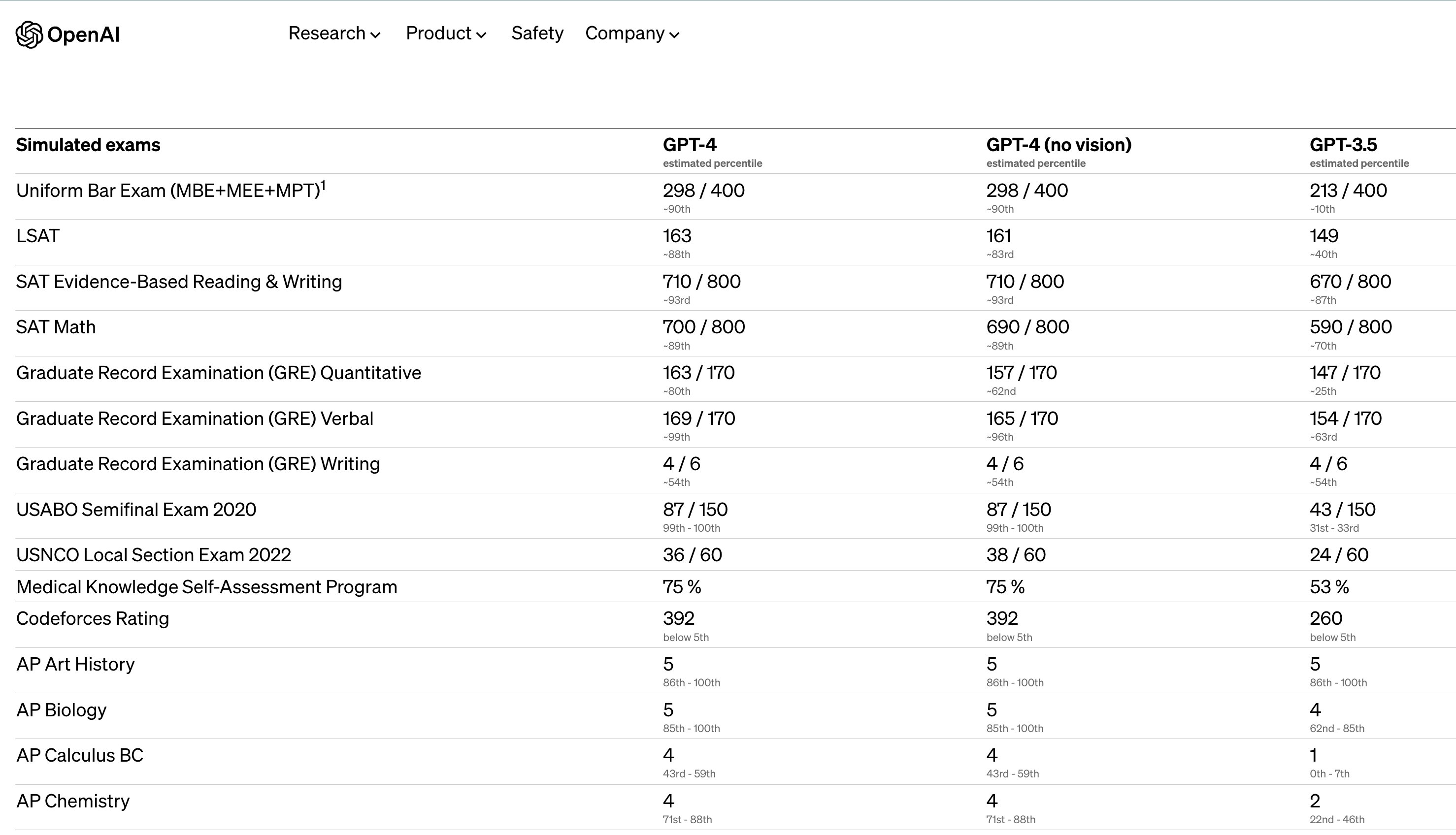

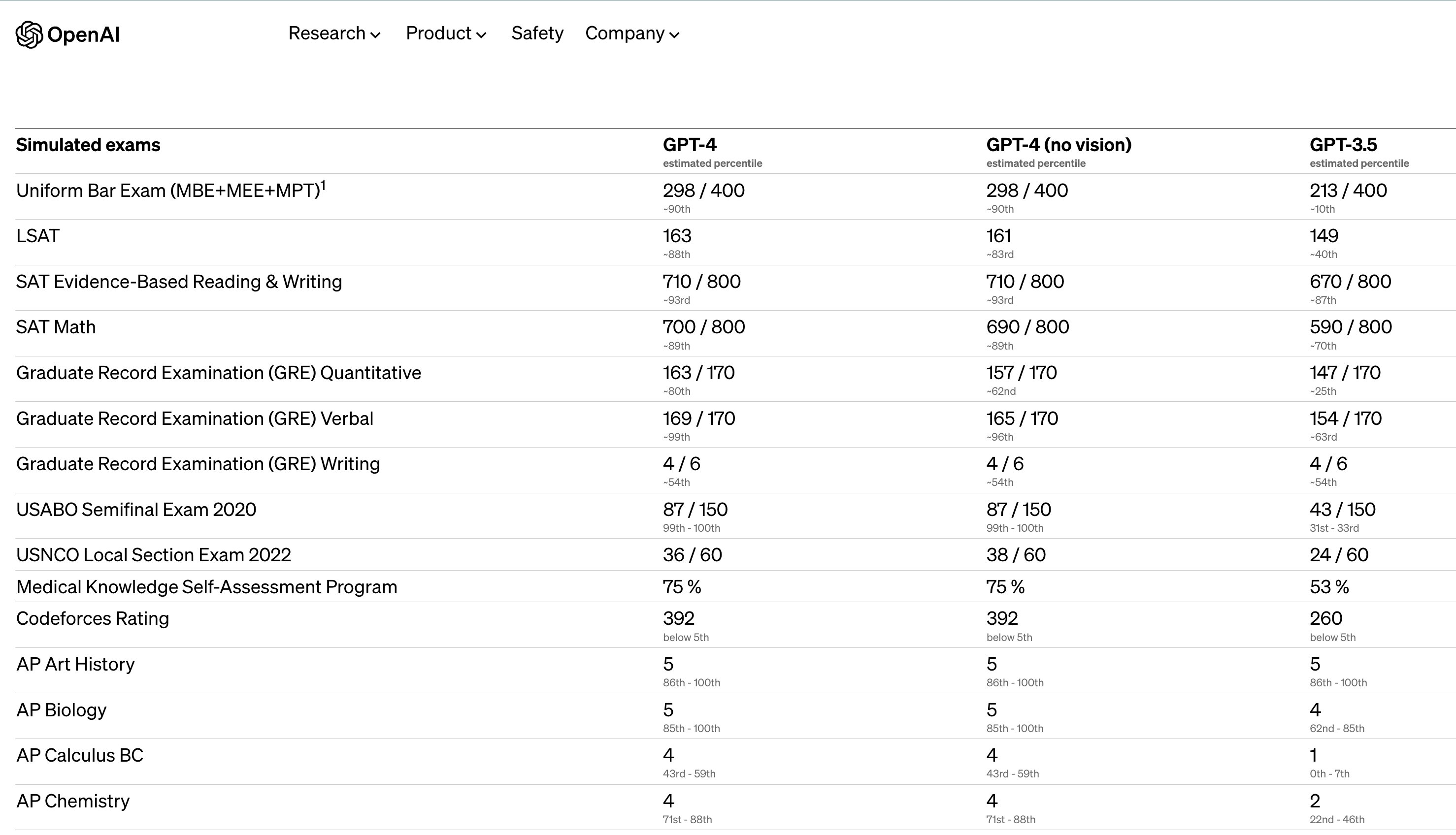

Some analysis has already been done with GitHub Copilot. They state how much time programmers have been saving. However, GPT-4 is an order of magnitude more advanced. Check out how it compares with GPT-3.5 on exams.

AccessBlaster

Be careful what you wish for

- Local time

- Today, 02:11

- Joined

- May 22, 2010

- Messages

- 7,595

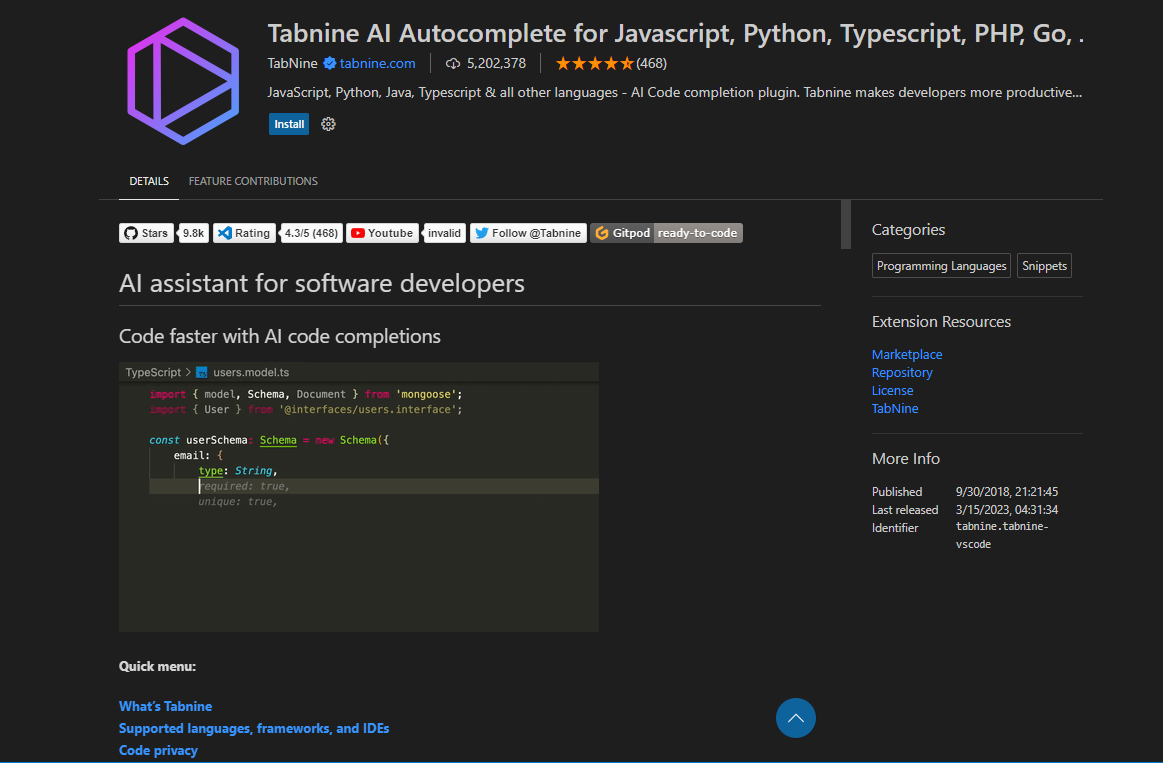

I would like to try Copilot in VS Code, but I can't justify the subscription price just for fooling around.

AccessBlaster

Be careful what you wish for

Thanks, I might give it a try. Some of the extensions I'm using already have some form of IntelliSense or autocomplete.

- Local time

- Today, 10:11

- Joined

- Sep 28, 1999

- Messages

- 8,064

ChatGPT is now going to open up an app store for plugins. This is a game changer. For example, one of them will allow ChatGPT to go to a website, retrieve the data, and then act on it, e.g. provide a summary, answers, and so on. I see ChatGPT becoming a central hub where people go for everything. If they want to visit the searched site for extra information, they can. But in many cases, why would they? ChatGPT will give them the answer to anything.

It kind of raises concerns for website owners, because their hard work becomes like a hard drive of answers for ChatGPT. They will miss out on ad revenue, which then brings into question the ability of the sites to survive. No idea how this is all going to play out, but I see ChatGPT as a real headache for the likes of Google, who recently gave a demo of their own system that received a mixed reception.

I used it to create an Excel spreadsheet that has over 200 lines of VBA in it, and I didn't write one line. I did it in a few hours and it runs like a champ. I just asked it how to do something and it wrote the code for me. And not once did it ask me why I was doing it that way. I had to make a few little corrections, but it works great for VBA. You do have to be able to read the code, though, because the way you ask a question is really important, and the resultant code can occasionally be a little off. I have 3 monitors on my rig, and ChatGPT replaced Google on the left monitor when I am writing code. Plus, spelling errors virtually don't exist.

I gotta give you guys the heads up on this new piece of amazing tech. OpenAI have released the most incredible chatbot ever! It is so smart I guarantee you will be amazed. It is currently free during this phase, but will probably end up as a paid thing in the future. I recommend you sign up now and have a play.

Just go to https://openai.com/blog/chatgpt/ and click TRY CHATGPT.

To give a taster for how good it is, I am pasting my question to it and its answer. It wrote this thread title!!

View attachment 105112

You can ask it just about anything, ranging from coding questions, tutorials, recipes, and so on. Give it a go and let me know what you think.

This has been the most profound new piece of tech that I have come across since the birth of the search engine. No wonder they have over one million signups in the first week!

Isaac

Lifelong Learner

AI expert warns Elon Musk-signed letter doesn't go far enough, says 'literally everyone on Earth will die'

Eliezer Yudkowsky, a decision theorist and artificial intelligence expert, is calling for a complete "shut down" of all AI development on systems more powerful than GPT-4, arguing it is obvious that such advanced intelligence will kill everyone on Earth.

- Local time

- Today, 04:11

- Joined

- Feb 28, 2001

- Messages

- 30,688

I disagree. Google will show you where the answer may be through links; it's up to you to decipher it.

AI actually answers your question in the form of working code.

In the past (through ChatGPT 4.0) that has not always been the case.

- Local time

- Today, 10:11

- Joined

- Feb 19, 2013

- Messages

- 17,803

Over the last few weeks, I've been getting phone calls from AI trying to sell me life insurance. They sound very human, but 'don't listen'. If you answer one of the questions with a bit of nonsense it assumes yes or no (whichever is in their favour) and you can't ask questions.

AI: Hi - how are you today?

Me: Well, not so good, had an accident with my lawn mower and chopped my feet off, I'm currently under general anaesthetic having them sewn back on.

AI: That's great! How are you covered for life insurance?

Me: How are you today?

AI: That's good to hear, I'm sure we can provide something more cost effective

and if you stay silent, it just burbles on

Still got some way to go

- Local time

- Today, 04:11

- Joined

- Feb 28, 2001

- Messages

- 30,688

I am reminded of a scene from The Imitation Game, in which the detective is questioning Alan Turing and diverts from the homosexuality investigation to ask about machine intelligence. The summary of the discussion is that it would be unfair to say that a machine cannot think, though it WOULD be fair to say that it cannot think like a human. (After all, it ISN'T a human.) The important question is, can you say that what the machine does is NOT thinking?

In terms of AI, like anything else, we have to define terms. For instance, there is AI... and then there is an AI. We can discuss AI methods, and ChatGPT certainly qualifies there. But an AI entity must be able to not only ANSWER questions, but must be able to ASK them spontaneously. An AI has to be smart enough to be able to ask or answer questions about self-awareness. An AI needs a sufficient degree of independent thought (i.e. responses NOT directly derived from inputs) to diverge from the question. Sort of like watching a 5-year-old playing organized outdoor sports and stopping to chase a butterfly.

The Descartes statement, "I think, therefore I am" needs modification perhaps. The long-winded version of the question might be "can I ask about whether I exist? In which case does that automatically prove that I exist?" The problem with that approach is, of course, that it still casts the question in a variant of a form posed by a human. So does it ignore the idea that since it isn't a human, it forces a non-human into a human mold? Does that very question try to force a square peg into a round hole? We need to formulate a more comprehensive way of deciding that something is intelligent. The question has to include humans - preferably in a way that includes all humans as being intelligent, though given today's politics, I wonder if that is entirely true.

This question is tied deeply into another recent thread of ours about whether humans have free will, and that question included an exploration of the idea that a human's intelligence is NOT steeped in neurons, axons, dendrites, and such, but is a 2nd order (or higher) phenomenon, a case where the whole IS greater than the sum of its parts. In other words, while we may be biologically-constructed machines, our brains are a product of something MORE than just neurons firing in a sequence. Intelligence is a result of the brain reaching and then exceeding a certain (currently unspecified) level of interconnections.

Sci-fi writers for decades have approached this problem and have seen the answer (philosophically speaking) as the product of reaching some level of complexity and brain intra-connectivity at which cyber self-awareness becomes an issue. Two examples (out of many...)

David Gerrold wrote When H.A.R.L.I.E. Was One about a machine intelligence that could talk with people and solve problems in a way that no one else could. In it, HARLIE was self-aware - and became aware that folks were trying to cut funding for his (its) project. So he learned how to blackmail people into supporting his project. He finagled them into building an even MORE powerful AI as part of the Graphic Omniscient Device (yep - the GOD project) for which only HARLIE could possibly be used as an interface.

Randall Garrett wrote Unwise Child about a machine intelligence capable of doing research on nuclear physics, but it was self-aware and got polluted by a radical person who fed "Snookums" with religion hoping to gain revenge on someone who had wronged his family. The poor little AI went nuts trying to deal with an essentially untestable medium, the spirit world, and had to be shut down.

AccessBlaster

Be careful what you wish for

I am a fan of this technology. I believe a revolution is coming, similar to the horseless carriage vs the horse and buggy manufacturers. I feel bad for people who've invested many years in learning their craft only to be rendered obsolete overnight. Hopefully, they will embrace the future and use this tech to their own advantage.

Isaac

Lifelong Learner

That sounds very much like an earlier post in this thread about how useless chatbots have been for customer service on websites.

Exactly the same. They seem to be programmed with a very small number of branches on the decision tree, something any competent member I've seen on this site could do better, and certainly nothing approaching what I would call AI.

Then again, there are probably different techniques for interacting with AI, and of course it is up to the programmer to do a good job of leveraging its capacities.
