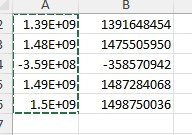

If I add a lot of records as text and then a long number saved as text, when I export the table as csv(text file), and open it in excel. the number is shown as E-notation.

The problem is that Excel defaults to "general" as the data type. If you explicitly change the column definition to text, the issue goes away.

I haven't had to do this for quite a while (several versions) so I tried it again. Excel is now completely broken and does not respect the conventional defaults for how you define a .csv file, even when you use the Data tab and specifically import a properly formatted .csv file. So, if you allow it to detect data types, it assumes numeric even for columns enclosed in quotes and therefore, all long numbers get rendered as scientific notation and the actual value is lost. Your only option is to tell Excel to not detect the data type. Then you open the workbook and mark each column yourself manually. Stupid.

Not sure who is running the show at MS but they certainly dropped the ball with this mistake. Earlier versions of Excel followed the old convention that any column enclosed in quotes was defined as text. That allowed my zip code - 06614 to be properly rendered with the leading zero because Zip is a CODE, not a number. Now, Excel can no longer distinguish based on a very simple convention that used to work.

Keep in mind that after you import the data using Data, and "do not recognize data type", you MUST manually set text to text. Excel STILL, even though you told it to import everything as text. still marks the column as General and you MUST convert it to Text or be bitten later.

When I open the .csv file with Excel, I do get this helpful message. if you don't blow by it, it will save your bacon. If you ever clicked the "don't notify me" option, you'll have to figure out how to make it prompt on every file again.