isladogs

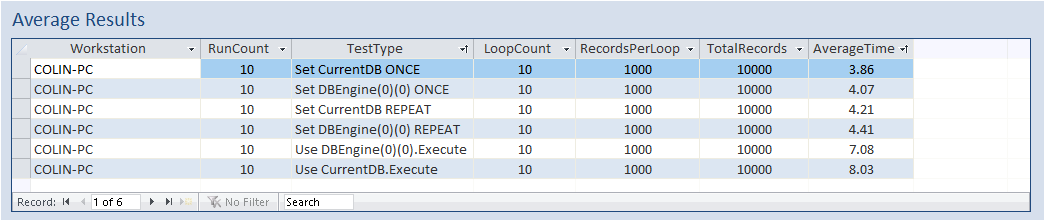

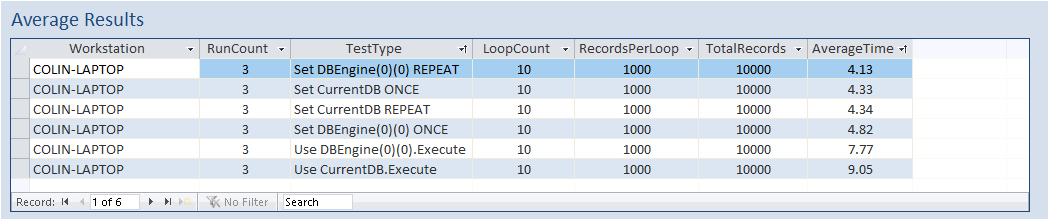

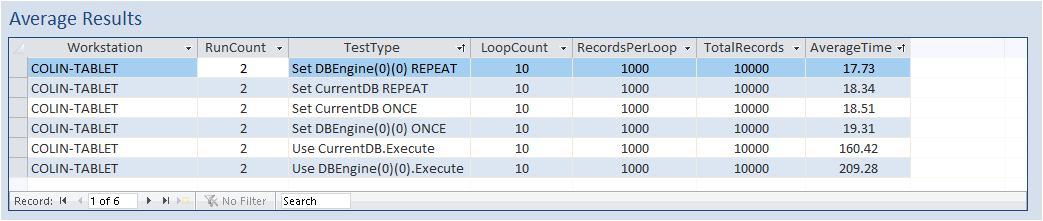

Thought it might be worth placing the results from my 3 devices together.

Results for each are consistent in terms of rank order, but the effect of the last two tests differs between devices.

1. Desktop: i5 processor 2.90GHz; 4GB RAM; 32-bit Access

2. Laptop: i5 processor 2.60GHz; 8GB RAM; 64-bit Access

3. Tablet: Intel Atom processor 1.33GHz; 2GB RAM; 32-bit Access

For info, clicking the View Summary button displays the results in a report with a summary chart (example attached)

As you can see (on these tests):

a) Overall the desktop is faster than the laptop despite having less RAM (though it has a slightly faster CPU)

b) The underpowered tablet struggles on all tests but the last two tests are dramatically different. I only ran these tests twice as they were so painfully slow

c) CurrentDB is generally faster than DBEngine(0)(0) - the opposite of what was said in the article by Jim Dettman at EE that triggered this thread.

After repeatedly trying to rubbish the results, he's finally gone quiet on the subject!

My belief is that calling CurrentDB each time is slower because Access has to work out what it refers to on every call.

Whereas setting db=CurrentDB once at the start 'optimises' the process, as Access then has that reference held in memory.

It's a bit like what stored queries are meant to do compared to SQL statements (though NG has been checking that and will, I believe, be reporting back on those tests in the near future).
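A minimal sketch of the three approaches being compared, for illustration only. It is not the code used in the tests above: the `TableDefs.Count` workload and all procedure names are assumptions chosen to keep the example small and read-only.

```vba
Option Compare Database
Option Explicit

' Hypothetical workload: read TableDefs.Count lngLoops times.
' Requires the standard DAO reference that Access sets by default.

' 1. Call CurrentDb on every pass (a new Database object each time).
Public Function CountUsingCurrentDbEachTime(ByVal lngLoops As Long) As Long
    Dim i As Long
    Dim n As Long
    For i = 1 To lngLoops
        n = CurrentDb.TableDefs.Count
    Next i
    CountUsingCurrentDbEachTime = n
End Function

' 2. Set db = CurrentDb once, then reuse the object variable.
Public Function CountUsingCachedCurrentDb(ByVal lngLoops As Long) As Long
    Dim db As DAO.Database
    Dim i As Long
    Dim n As Long
    Set db = CurrentDb
    For i = 1 To lngLoops
        n = db.TableDefs.Count
    Next i
    Set db = Nothing
    CountUsingCachedCurrentDb = n
End Function

' 3. Set db = DBEngine(0)(0) once, then reuse the object variable.
Public Function CountUsingDBEngine(ByVal lngLoops As Long) As Long
    Dim db As DAO.Database
    Dim i As Long
    Dim n As Long
    Set db = DBEngine(0)(0)
    For i = 1 To lngLoops
        n = db.TableDefs.Count
    Next i
    Set db = Nothing
    CountUsingDBEngine = n
End Function
```

Each function can be called from the Immediate window, e.g. `? CountUsingCachedCurrentDb(1000)`. All three should return the same count; only the time taken is expected to differ.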

As for the variation between devices, perhaps a different level of priority is put on processing tasks depending on the specifications.

So if a device is underpowered (2GB tablet) or, if I can put it like this, possibly 'overpowered' for the task like Minty's 32GB dream machine, the discrepancy is more marked

For info, the times are calculated using the system clock.

This gives output in milliseconds but I've rounded all values to 2 d.p.

The reason I've done this is that the system clock typically updates about 60 times per second, so each individual result is only accurate to about 0.016 seconds.

For that reason, doing the tests repeatedly is necessary for greater accuracy.
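The averaging idea can be sketched as below. This is an assumption-laden example, not the timing code from the test app: the post does not say which clock API was used, so the Windows `GetTickCount` function (milliseconds since startup, with a resolution typically in the 10 to 16 ms range) stands in for it, and the function name and workload are hypothetical.

```vba
Option Compare Database
Option Explicit

' Assumption: GetTickCount stands in for the unspecified "system clock".
#If VBA7 Then
    Private Declare PtrSafe Function GetTickCount Lib "kernel32" () As Long
#Else
    Private Declare Function GetTickCount Lib "kernel32" () As Long
#End If

' Returns the mean elapsed time in seconds, rounded to 2 d.p.,
' over lngRuns timed runs of a placeholder workload.
Public Function MeanElapsedSeconds(ByVal lngRuns As Long, _
                                   ByVal lngLoops As Long) As Double
    Dim db As DAO.Database
    Dim lngStart As Long
    Dim dblTotalMs As Double
    Dim r As Long
    Dim i As Long
    Dim n As Long

    If lngRuns < 1 Then Exit Function   ' nothing to average; returns 0

    Set db = CurrentDb
    For r = 1 To lngRuns
        lngStart = GetTickCount()
        For i = 1 To lngLoops
            n = db.TableDefs.Count      ' placeholder workload
        Next i
        ' A single difference is only good to one clock tick.
        ' Note: the tick count wraps after about 24.8 days of uptime,
        ' which this sketch does not handle.
        dblTotalMs = dblTotalMs + (GetTickCount() - lngStart)
    Next r
    Set db = Nothing

    ' Averaging over many runs smooths out the per-run tick error.
    MeanElapsedSeconds = Round(dblTotalMs / lngRuns / 1000, 2)
End Function
```

For example, `? MeanElapsedSeconds(10, 100000)` in the Immediate window times ten runs of 100,000 loops each and reports the mean.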

For anyone interested, last year I did an example to measure reaction times that uses the same method of calculating times: https://www.access-programmers.co.uk/forums/showthread.php?t=298140

@Minty

You might also be interested in comparing different methods of pausing the processor actions - DoEvents vs Idle RefreshCache - see post 33
