My anecdotal assumption would be that the MS Windows "complexity" of implementing multitasking should actually be considered overly complex (bloated, deficient).

You DID see my comment-in-passing, did you not? "But to the government, 'heavyweight' isn't always bad because it is an excuse to buy a bigger, better machine to run that fat pig... (excuse me, operating system) with decent response."

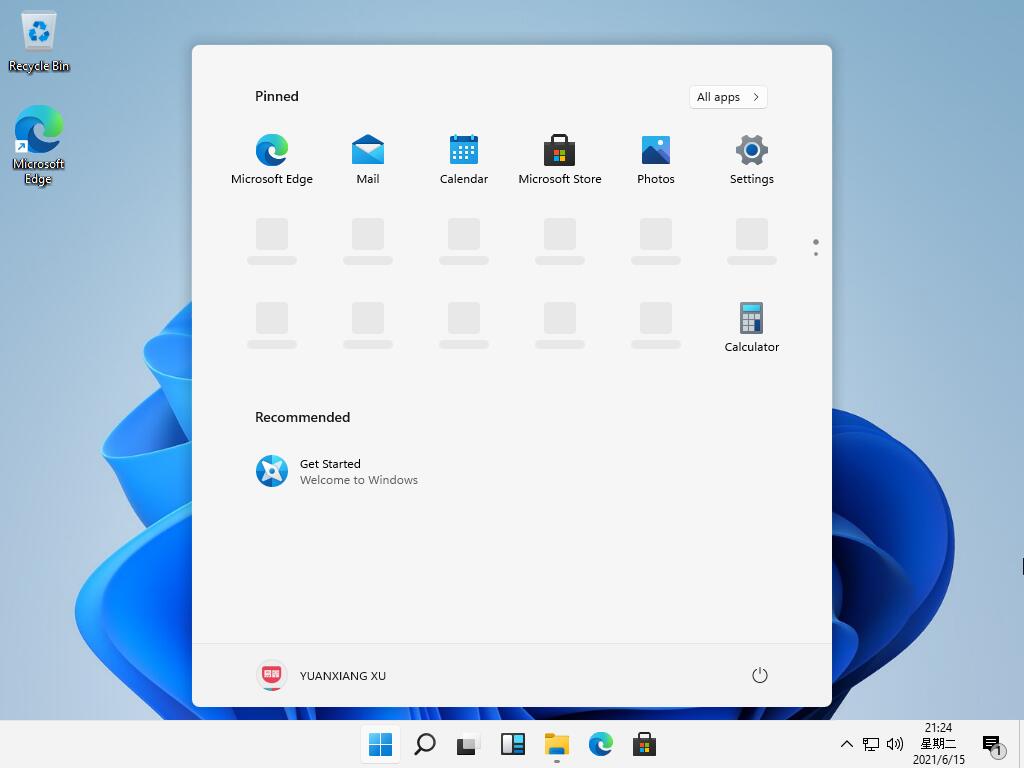

Part of Windows involves event management and the GUI, which sit in a separate layer on UNIX machines. In fact, up to Win 3.11, DOS was the base and Windows was just a layer. Starting with WinNT, the base is Windows and the DOS (CMD) environment is the layer. At that point, a lot of the paging and swapping control merged back into the Process Scheduler. No, that is not the thing that runs batch jobs and programs at specific times. It is the thing that decides how competing processes share the machine's resources.
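For anyone who wants to see the "sharing among competing processes" idea in miniature, here is a minimal sketch of plain round-robin time slicing. It is a teaching toy, not how the Windows scheduler actually works (the real one is priority-driven and preemptive), and the names `round_robin`, `burst_times` and `quantum` are mine, made up for illustration.

```python
from collections import deque


def round_robin(burst_times, quantum):
    """Return the order in which processes finish under round-robin slicing.

    burst_times: dict of hypothetical process name -> CPU time still needed
    quantum: length of one time slice (same units as the burst times)
    """
    ready = deque(burst_times.items())  # queue of (name, remaining time)
    finished = []
    while ready:
        name, remaining = ready.popleft()
        remaining -= quantum  # the process runs for one slice
        if remaining > 0:
            ready.append((name, remaining))  # not done: back of the line
        else:
            finished.append(name)  # done: record completion order
    return finished


print(round_robin({"A": 5, "B": 2, "C": 4}, quantum=2))
```

The short job B gets out first even though A was ahead of it in line, which is the whole point of slicing time instead of running each job to completion.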

WAY back, so long ago that most people won't remember it, computers used SWAPPING to maximize use of memory. Swapping involved writing whole memory images out to disk and then reading in different images. Slow but actually effective, particularly if you had a lot of batch-oriented jobs to run. The Atlas computer (Univ. of Manchester, 1963) was the first to do PAGING - essentially, partial swapping - so that using a time-slice method plus probability-based paging, multiple tasks could be partly in memory. Now, the Windows memory dynamics model matches one that was used in 1977 for VAX/VMS (later renamed OpenVMS). In the interests of brevity, I will avoid a detailed discussion of virtual demand-paged memory with least-recently-used prioritization. But that is what Windows uses. Look up "Windows Paging Dynamics" if you get overly curious.
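If you want the short version of demand paging with least-recently-used eviction, the following is a minimal sketch that just counts page faults for a sequence of page references. It assumes strict LRU over a fixed number of frames; real kernels only approximate LRU, and the function name `simulate_lru` and the sample reference string are invented for illustration.

```python
from collections import OrderedDict


def simulate_lru(reference_string, num_frames):
    """Count page faults when num_frames physical frames are managed by LRU."""
    frames = OrderedDict()  # resident pages, least recently used first
    faults = 0
    for page in reference_string:
        if page in frames:
            frames.move_to_end(page)  # hit: mark as most recently used
        else:
            faults += 1  # fault: the page has to be brought in from disk
            if len(frames) >= num_frames:
                frames.popitem(last=False)  # evict the least recently used
            frames[page] = True
    return faults


refs = [1, 2, 3, 1, 4, 2, 5, 1, 2, 3]
print(simulate_lru(refs, 3))  # 8 faults with three frames
print(simulate_lru(refs, 4))  # 6 faults with four frames
```

Giving the same workload one more frame drops the fault count, which is the small-scale version of why a bigger machine makes a heavyweight O/S feel responsive.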

Clarification needed. Unix, started out as a multitasking OS (environment) and has remained so through its (forked) evolution into Linux.

UNIX does advanced memory management too, but that was a later development. You need to re-read that article you cited. UNIX really started out as an offshoot of MULTICS - a time-sharing O/S. The project became bloated enough that Bell Labs pulled out and started their own project, which led to UNIX running on an old DEC PDP-7 (a predecessor of the PDP-11). The FIRST UNIX was not multi-processing because it was dedicated to switching devices. It HAD to be lightweight because Bell Labs (AT&T) wanted it as a smart device controller. You see, in that time frame, industrial manufacturers started looking at software-controlled logic sequencers as a way to automate complicated steps. UNIX-like lightweight O/S implementations made it possible to use software in ways that commercial manufacturers had never considered.

I was blown away by this next fact, but one of the earliest modern devices to be automated was ... the industrial washing machine. (Not claiming it was the first.) The mechanical timers and complex relay sequencers gave way to computer instructions. Think about it... no fewer than 10 wash settings such as "Delicates" and "Wash & Wear" and "Heavy Items" - with water-temperature control, spin speed control, ... all done by a microchip and a ROM chip and a small amount of RAM for working space. Early UNIX was IDEAL for that concept. A friend of mine worked on that project and I almost went to work with him - though I found another job that was more interesting and more chemistry-oriented before he could woo me to his shop. (After all, my "Doc" IS a chemistry PhD...)

As time passed, the open nature of UNIX led to multiple variant implementations such that by the 1990s, a pot-load of versions existed. At the time, the competition among multiple vendors was called "the Unix Wars." Eventually, UNIX "standards" fell under the Open Software Foundation (or its predecessor) and the multiple versions of UNIX that had diverged so dramatically began to converge. However, at the time I retired in 2016, my department security status tracker still registered 11 different flavors of UNIX that were not merely different versions of one O/S.
