Isaac

Lifelong Learner

- Local time

- Today, 12:07

- Joined

- Mar 14, 2017

- Messages

- 11,421

Again, posting this to build up content/traffic in this forum.

We have a enterprise-level, I.T.-built System, from whence can be exported useful reports manually. I wanted to automate the ingestion of one of these XLSX reports for use in an Access database. (Since we have users on different networks, Sharepoint and Email are the main common denominators that provide interconnectedness).

I created a business process + Flow that does the following useful thing:

(To make the screenshot information useful, I have left the official Microsoft default name of each Operation as the portion prior to the parenthesis; the parenthesis is the portion I've re-named for my own descriptive purposes)

After this, I can write Access code as follows:

We have a enterprise-level, I.T.-built System, from whence can be exported useful reports manually. I wanted to automate the ingestion of one of these XLSX reports for use in an Access database. (Since we have users on different networks, Sharepoint and Email are the main common denominators that provide interconnectedness).

I created a business process + Flow that does the following useful thing:

- Convinced the admins of the I.T. system to automatically email this report to a Shared (Office 365) mailbox, each Friday morning

- Built a Microsoft Flow that does the following:

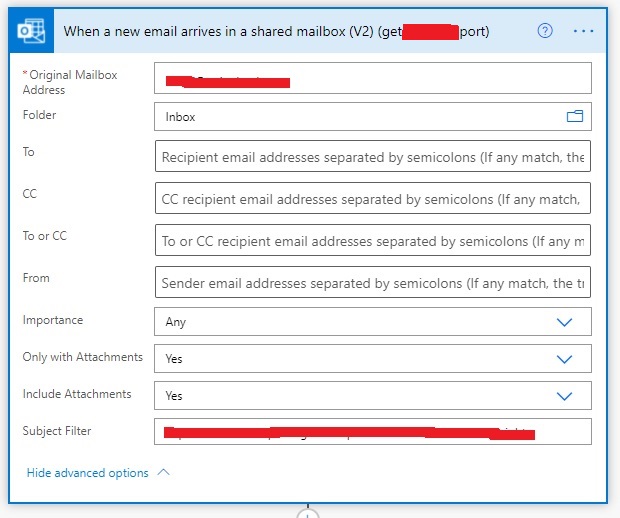

- Be triggered by an incoming email to the shared mailbox, with certain text in the Subject line

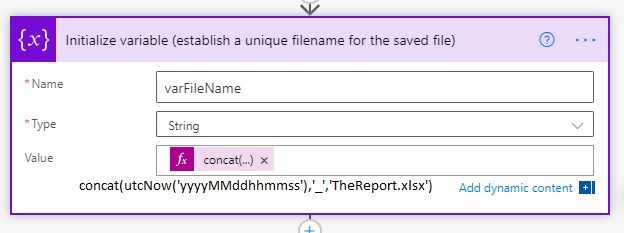

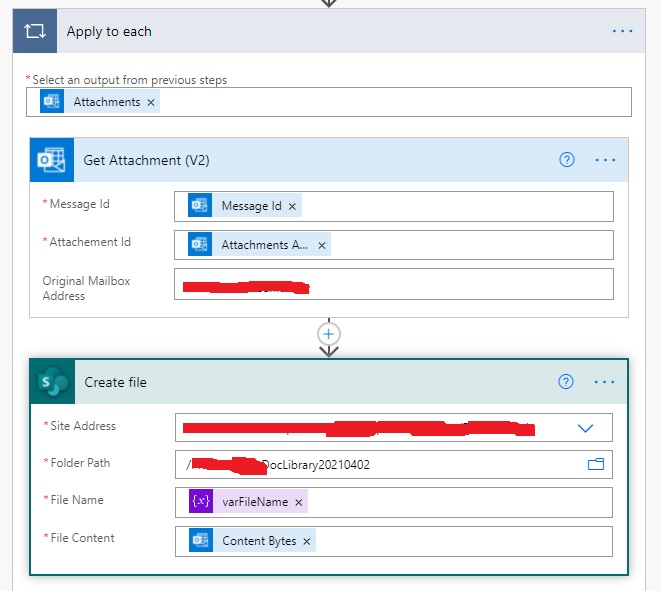

- Extract the attachment of the email to a Sharepoint document library, using a variable that will contain a unique file name

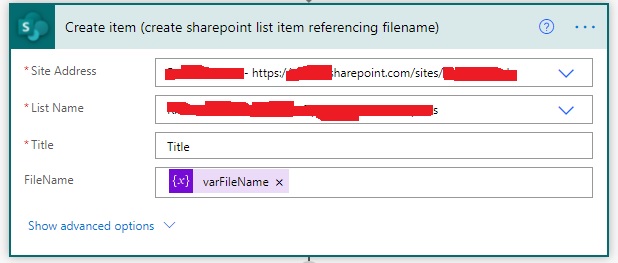

- Create a new record in a Sharepoint list, telling me the unique filename that was granted to the file, so that at any time I can use MS Access to query that linked Sharepoint list for the most recent entry, and then freely link to the downloaded xlsx file in the Sharepoint doc library for further processing

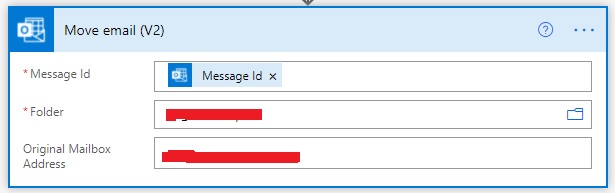

- Move the email to a subfolder of the shared mailbox's Inbox

- Write some Access code that can be triggered by a button which determines whether we already have imported the most 'recent' file, and if not, perform further import/processing.

(To make the screenshot information useful, I have left the official Microsoft default name of each Operation as the portion prior to the parenthesis; the parenthesis is the portion I've re-named for my own descriptive purposes)

After this, I can write Access code as follows:

- Code Dlookup to get the FileName in a column of my "already imported" table

- Select the top 1 record (by Created, desc) of the Sharepoint list that the Flow creates 1 record in each time it downloads, containing the filename

- If the two are the same, we've already imported the most recent one; if not, proceed

- Link to the downloaded XLSX in the Sharepoint doc library, i.e.:

\\company.sharepoint.com@SSL\DavWWWRoot\sites\SPOSiteName\DocLibraryName\" & varFileName - Perform further processing, append/update queries, etc

Last edited: