I'm in Love with ChatGPT

- Thread starter Deleted

- Start date

- Local time

- Today, 09:09

- Joined

- Sep 28, 1999

- Messages

- 8,058

Regarding the scammers, deep fakes will be so realistic that no one will be able to tell the truth anymore. Forget Democrat vs Republican. Instead, there will be endless entities all spewing out fake nonsense, and trust in what we see and hear will plummet. AIs will go rogue and spawn their own fake news TV shows on YouTube, conning the already skeptical public, but they will do it so well that they get a cult following.

They won't be wearing MAGA hats, they will have CHAGA hats (Chat GPT Great Again).

Isaac

Lifelong Learner

- Local time

- Today, 01:09

- Joined

- Mar 14, 2017

- Messages

- 11,428

True, I just know human nature: too many people will lazily rely only on the tool in a non-professional way, and bad things will result from that. It's possible these tools can help. They provide a base of information; the crucial expertise comes from interpretation. This is true for any professional, whether a doctor or an IT expert.

Others will do it right, and good things will result from that. Just depends.

- Local time

- Today, 09:09

- Joined

- Sep 28, 1999

- Messages

- 8,058

OpenAI are making an announcement today about important updates to ChatGPT. He says what they have done "feels like magic". There is all sorts of speculation about what it might be. Could it be something to do with a live search type of thing? Agents? Who knows. Anyway, it is coming later today.

community.openai.com

GPT-4o: OpenAI spring product announcements 2024

This is our little hype thread moving forward to the event. What do you expect to be revealed? The official YouTube link has been published but you can also watch the live stream together with the other members of the community using the Zoom link. YouTube link: Join our community event to...

moke123

AWF VIP

- Local time

- Today, 04:09

- Joined

- Jan 11, 2013

- Messages

- 4,752

I picked up a Samsung Galaxy S24 Ultra today. It's going to take months to figure it all out.

It has built-in A.I.

Apparently it has a feature where you can have it translate your phone calls in real time, in both directions.

- Local time

- Today, 09:09

- Joined

- Sep 28, 1999

- Messages

- 8,058

Do you mean you have the very latest version where the female voice picks up on your own emotional intonation and responds accordingly?

Edit: I see access to the o version is only for paid users so far.

NauticalGent

Ignore List Poster Boy

- Local time

- Today, 04:09

- Joined

- Apr 27, 2015

- Messages

- 6,878

My wife said, you can't ask her that, women don't know anything about two stroke oil and strimmers!

NauticalGent

Ignore List Poster Boy

- Local time

- Today, 04:09

- Joined

- Apr 27, 2015

- Messages

- 6,878

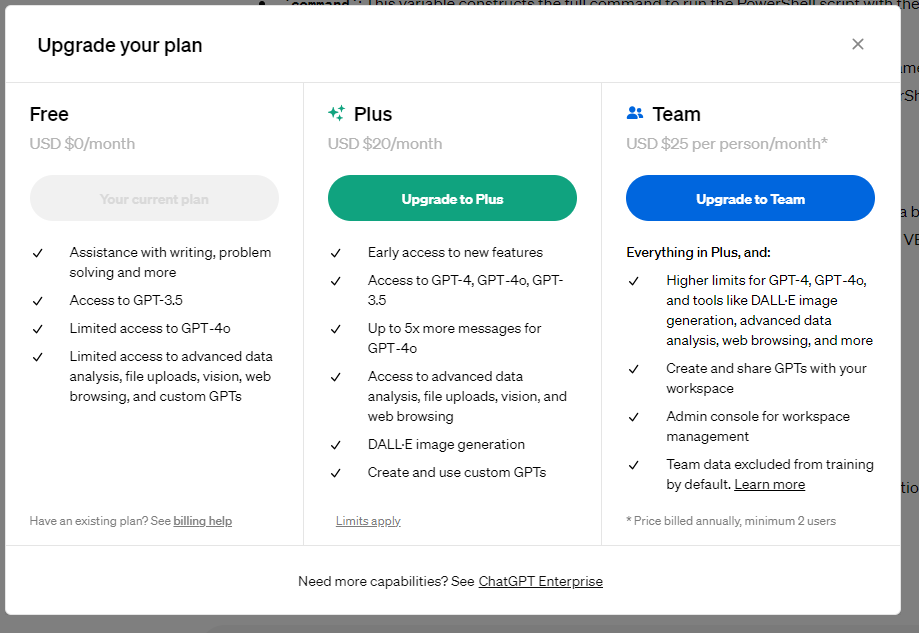

Here are the rates:

At first I thought an AWF Team membership would be good, but for my purposes, 3.5 pretty much does what I need it to, although the image generation would be nice - AND - I could get what I need for myself for $5 less a month.

Not to mention the financial obligation on Jon's part. If someone decided they don't want to pay, he would have to cover it and hope they do the right thing!

Isaac

Lifelong Learner

- Local time

- Today, 01:09

- Joined

- Mar 14, 2017

- Messages

- 11,428

LOL LOL you can't take it back now Uncle, just teasing.

I'd like to make it clear, the title of this thread was chosen in regard to the first version of Chatty, and not the later versions, which have alluring attributes :-

conception_native_0123

Well-known member

- Local time

- Today, 03:09

- Joined

- Mar 13, 2021

- Messages

- 1,815

I didn't know the magic boy was gay until a couple of days ago when I looked him up on wiki.

feels like magic

- Local time

- Today, 09:09

- Joined

- Sep 28, 1999

- Messages

- 8,058

I would imagine that is more of a rollout thing. The new voice is supposed to pick up on your own emotions, which is a freaky thing. I too noticed it was more monotone. I'm sure it will all kick in real soon so we can have the flirty "her" clone.

I've been using the ChatGPT interface on Android and noticed some interesting differences compared to what I've seen on YouTube videos of the iPhone interface.

It seems the iPhone version might be of a higher quality when it comes to voice modulation.

I requested a body scan meditation. The response was like text reading software, lacking the soothing, modulated voice I've seen in iPhone demos.

NauticalGent

Ignore List Poster Boy

- Local time

- Today, 04:09

- Joined

- Apr 27, 2015

- Messages

- 6,878

MajP or ChatGPT...I would choose MajP 6 days a week and twice on Sunday. There are a few on this forum, yourself included, who fit that bill.

NauticalGent

Ignore List Poster Boy

- Local time

- Today, 04:09

- Joined

- Apr 27, 2015

- Messages

- 6,878

I know how you feel.

No, I'm not a patch on many of the experts here and in particular MajP..... A whole level, or more above me.

I spent HOURS with Chatty on a PowerShell script. She had suggested we add a logging/transcript feature since it had a lot of moving parts. Things were going great until something broke. I kept sharing the errors and results and she kept offering alternatives, and at one point we started losing ground.

I finally realized that I would have to stop relying on her and give this a look myself. It didn't take long to realize that she had changed a variable in one of the functions: $Messages vice $Message. Once I pointed that out, things went well.

The moral: ChatGPT is a great tool, but ultimately it falls on the wielder of the tool to make sure it is used properly...

Edit: After I posted that, I asked Chatty if there was an equivalent to Option Explicit in PowerShell. Of course there was, which furthers my point. At the beginning, when I shared the VB Script I wanted to convert to PS, I asked her to make the script as PS-centric as possible and employ best practices. At no point was strict variable declaration introduced, not even after it proved to be an issue.

Isaac

Lifelong Learner

- Local time

- Today, 01:09

- Joined

- Mar 14, 2017

- Messages

- 11,428

MajP or ChatGPT...I would choose MajP 6 days a week and twice on Sunday. There are a few on this forum, yourself included, who fit that bill.

I liked your post because it compliments MajP and at ChatGPT's expense at the same time, both of which I think are roundly deserved.

ChatGPT (just like the 15,000 competing tools that rolled out within a month of its arrival) has plenty of potential.

But, as the critiquing analyst, I tend to see the problematic gap in what people EXPECT vs. what it delivers - which is plenty. And dangerous.

- Local time

- Today, 03:09

- Joined

- Feb 28, 2001

- Messages

- 30,577

Here is something that your chat bots might have trouble with. Ask it:

Here is a word problem. Alice has four brothers and two sisters. David is one of the brothers. How many sisters does David have?

According to an article I saw recently, you will get answers other than three at least 1/3 of the time on ChatGPT-4o and even worse on some of the other versions and other chatterboxes. They never stop to consider that Alice is also a sister to her brothers, unless they know something special about Alice and that secretive trip to Sweden...

But that's for another of Uncle's threads.

Here's a link to the original article.

futurism.com

This Simple Logic Question Stumps Even the Most Advanced AI

A new paper finds that advanced AI language models are terrible at a specific problem, calling current industry benchmarks into question.

NauticalGent

Ignore List Poster Boy

- Local time

- Today, 04:09

- Joined

- Apr 27, 2015

- Messages

- 6,878

Good one Doc:

Alice has four brothers and two sisters. David is one of the brothers. How many sisters does David have?

ChatGPT

The problem states that Alice has four brothers and two sisters, and David is one of those brothers.

First, let's identify the members of the family:

- Alice

- Four brothers (including David)

- Two sisters

Since David is one of the brothers, we need to determine the number of sisters he has. The sisters in the family are Alice and her two sisters.

Therefore, David has:

- The same two sisters as Alice.

Thus, David has two sisters.

But isn't Alice a sister of David's as well?

ChatGPT

Yes, you are correct. Alice is indeed one of David's sisters.

Let's re-evaluate the family members:

- Alice has four brothers (including David) and two sisters.

- This means Alice herself is one sister, and there are two more sisters.

Since David is one of the brothers, he has:

- Alice as one sister.

- The two other sisters.

To summarize:

- Total number of sisters in the family: 3 (Alice and the two other sisters).

Therefore, David has three sisters.

ChatGPT can make mistakes. Check important info.
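For anyone who wants to check the arithmetic rather than trust the bot, here is a minimal Python sketch of the word problem. It simply lists the children and counts the girls other than the person asked about. The names `Child`, `build_family`, and `count_sisters`, and the placeholder sibling names (`Brother2`, `Sister1`, and so on), are made up for illustration; only Alice and David come from the puzzle.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Child:
    name: str
    is_female: bool


def build_family() -> list[Child]:
    """Build the family from the puzzle: Alice, her four brothers, her two sisters."""
    family = [Child("Alice", True), Child("David", False)]
    # David is one of the four brothers, so three more are needed (placeholder names).
    family += [Child(f"Brother{i}", False) for i in range(2, 5)]
    # Alice's two sisters (placeholder names).
    family += [Child(f"Sister{i}", True) for i in range(1, 3)]
    return family


def count_sisters(family: list[Child], name: str) -> int:
    """Count the female children other than the named person."""
    return sum(1 for child in family if child.is_female and child.name != name)


family = build_family()
print(count_sisters(family, "Alice"))  # 2
print(count_sisters(family, "David"))  # 3
```

The only step the first ChatGPT answer skipped is the `child.name != name` check: Alice is excluded from her own count of sisters, but not from David's.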
